Making AI and information more Helpful, Humane, Harmless

Helping policymakers understand the importance of AI safety

Public policy on how these AI systems are developed will shape how future generations use this technology.

Our objective is to advance the AI race, document organizational risks, identify rogue actors and mitigate the risks they pose, and make the explainability of AI models more accessible to the public sector.

We believe that a clear understanding of the internal workings of neural networks is essential for the safe development of AI systems.

“I’m increasingly inclined to think that there should be some regulatory oversight, maybe at the national and international level, just to make sure that we don’t do something very foolish. I mean with artificial intelligence we’re summoning the demon.” —Elon Musk, MIT’s AeroAstro Centennial Symposium

“I’m more frightened than interested by artificial intelligence – in fact, perhaps fright and interest are not far away from one another. Things can become real in your mind, you can be tricked, and you believe things you wouldn’t ordinarily. A world run by automatons doesn’t seem completely unrealistic anymore. It’s a bit chilling.”

—Gemma Whelan, The Guardian

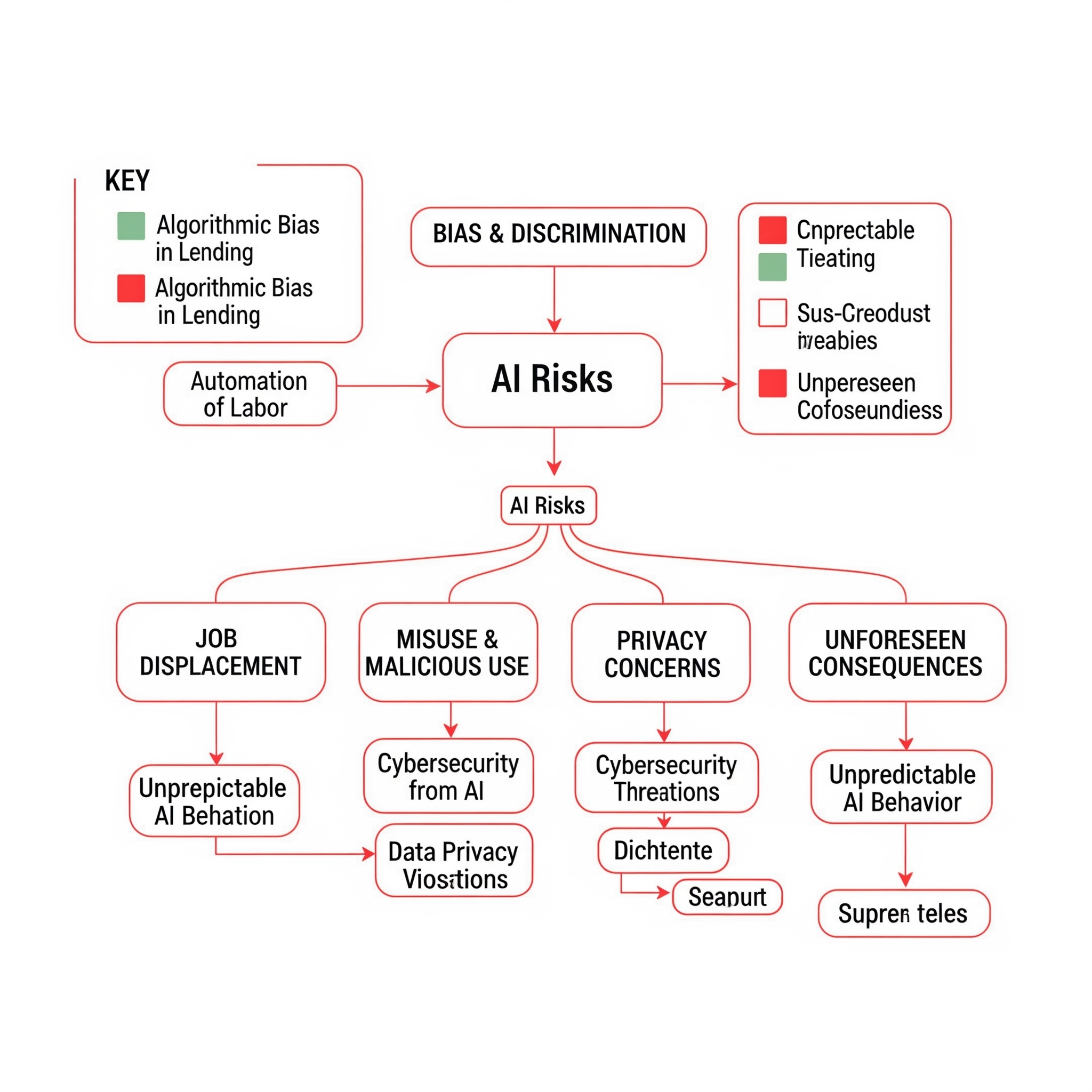

As AI systems become more agentic and increasingly interdependent, it becomes more difficult to align them with human values and intentions. This can lead to unintended consequences, such as harmful behaviors or misaligned goals.

AI systems have tremendous potential to be exploited by malicious actors. From adversarial attacks to model inversion, the risks are significant and complex. Consequences include autonomous vehicle failures, fraud, privacy violations, and more.

As AI systems advance, power dynamics will shift, encouraging monopolistic tendencies and making it easier to persuade people at scale toward specific objectives.

The AI race drives innovation, but it also risks the deployment of flawed systems, amplified biases, privacy violations, and the misuse of AI in weapons.

Most large-scale LLMs are trained on internet data, which is often factual but sometimes includes dishonest or biased content. This can perpetuate misinformation and introduce vulnerabilities in automated systems. We aim to develop large-scale datasets of misinformation to help LLM providers analyze, fine-tune, and mitigate risks, making systems more honest, helpful, and harmless.

Our mission is to ensure that policymakers understand the importance of AI safety and implement policies for developing these systems securely.